As AI rapidly transforms industries around the world, demand for AI professionals is rising sharply. Whether you are an aspiring AI engineer or a recruiter screening applicants, it pays to stay up to date on the most common AI interview questions. Here's a list of the top AI interview questions with answers for 2024. It is designed to prepare you for any kind of AI interview, from basic concepts to advanced topics such as deep learning and reinforcement learning.

Easy AI Interview Questions

What is Artificial Intelligence (AI)?

Artificial Intelligence is the field of building systems that can perform tasks, both simple and complex, that normally require human intelligence, such as generating content, analyzing data, and making decisions. The core building blocks of AI are algorithms, data, and, most importantly, computational power.

AI can be used in Automation, Data Analysis, Personalization, Healthcare, Natural Language Processing, Image and Video Analysis, Predictive Analytics, Gaming, Robotics, and Education.

AI Use Cases: Business Intelligence, Application Development, Self-driving Cars, Virtual Personal Assistants, and Customer Service Chatbots.

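How is AI different from Machine Learning (ML)?

Machine learning is a subset of AI. AI is the broad goal of building systems that perform tasks requiring human intelligence, while ML is one way to get there: instead of being explicitly programmed with rules, an ML system learns patterns from data.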

Explain the difference between supervised and unsupervised learning.

🔍 Picture yourself on a treasure hunt. You can play the game in two ways:

Supervised Learning: You get a treasure map! 🗺 Here, you know the locations of some treasures (answers) already. Your task is to spot patterns to find the rest. The map gives you directions (labeled data) pointing you to the right spots. The algorithm learns from these labeled examples to predict where future hidden treasures might be. It’s like having a smart guide who’s always teaching you the tricks!

🛠 Examples: Catching spam emails and guessing house prices.

Unsupervised Learning: No map, just gut feelings! 🧭 In this case, you get no map or clues, only the landscape. You need to explore the island on your own and guess where treasures might be hiding. The algorithm searches for hidden patterns and structures without any labels or guidance. It's similar to being a detective, spotting hidden groups of treasures based on what's alike (or what stands out).

🛠 Examples: Grouping customers and spotting fraud.

To sum up:

- Supervised Learning = You work with a map (labeled data) so you know where some treasures are and learn from that.

- Unsupervised Learning = You explore unknown territory (unlabeled data) to find hidden gems on your own!

Both lead to treasure, but the journey differs! 🏝✨
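To make the contrast concrete, here is a minimal Python sketch on made-up 1-D data. The supervised half learns one centroid per label from labeled examples (a nearest-centroid classifier); the unsupervised half runs k-means to discover the two groups without any labels. The data values, function names, and the choice of these two particular algorithms are illustrative assumptions, not the only way to do either kind of learning.

```python
import random

# Toy 1-D data: two groups of points around 1.0 and 5.0 (made-up values).
points = [0.8, 1.1, 1.3, 0.9, 4.8, 5.2, 5.1, 4.9]

# --- Supervised: we have the "map" (labels) and learn from it ---
labels = ["low", "low", "low", "low", "high", "high", "high", "high"]

def fit_nearest_centroid(xs, ys):
    """Learn one centroid (mean) per label from labeled examples."""
    centroids = {}
    for label in set(ys):
        members = [x for x, y in zip(xs, ys) if y == label]
        centroids[label] = sum(members) / len(members)
    return centroids

def predict(centroids, x):
    """Predict the label whose centroid is closest to x."""
    return min(centroids, key=lambda label: abs(x - centroids[label]))

# --- Unsupervised: no labels, so discover the groups with k-means ---
def kmeans_1d(xs, k=2, iters=10, seed=0):
    rng = random.Random(seed)
    centers = rng.sample(xs, k)  # initialize from random data points
    for _ in range(iters):
        clusters = [[] for _ in range(k)]
        for x in xs:
            nearest = min(range(k), key=lambda i: abs(x - centers[i]))
            clusters[nearest].append(x)
        # Move each center to the mean of its cluster (keep it if the cluster is empty).
        centers = [sum(c) / len(c) if c else centers[i] for i, c in enumerate(clusters)]
    return sorted(centers)

centroids = fit_nearest_centroid(points, labels)
print(predict(centroids, 4.5))  # high
print(kmeans_1d(points))        # two centers, near 1.0 and 5.0
```

Both halves end up with similar centers here; the difference is that the supervised version was told which points belong together, while k-means had to find that out on its own.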


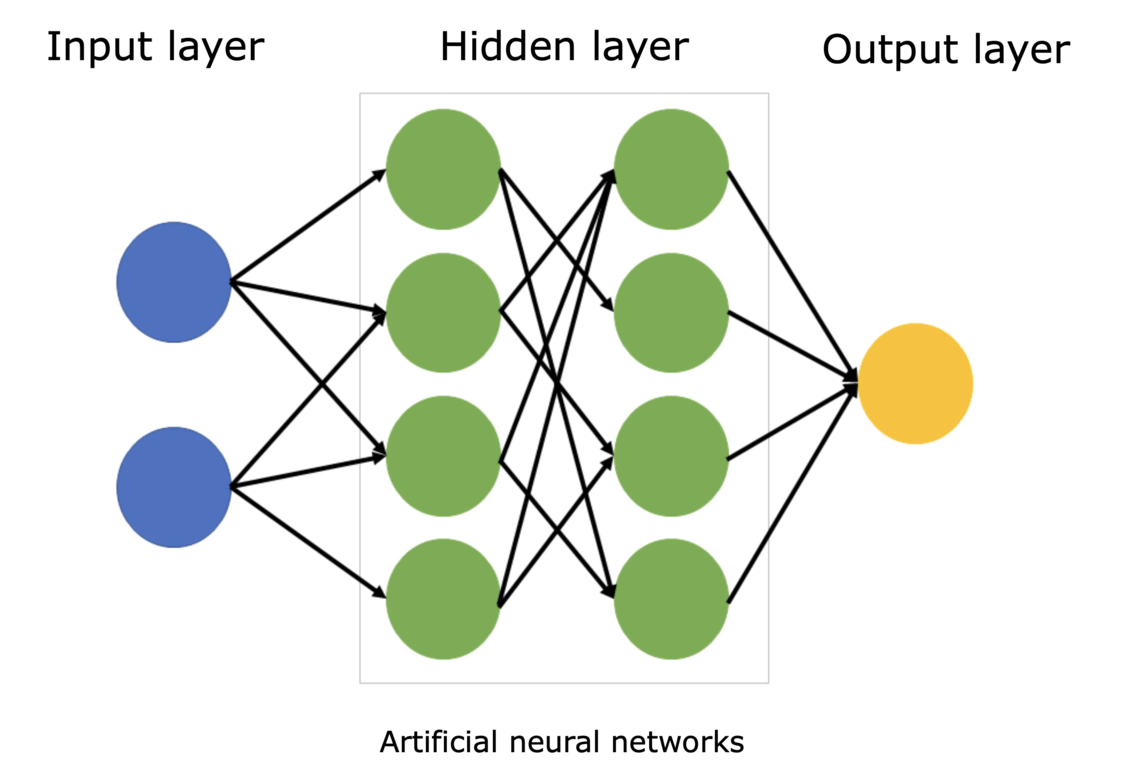

What are neural networks?

Neural networks are machine learning algorithms inspired by how the human brain works. They consist of interconnected nodes, or artificial neurons, that process information in a way loosely analogous to biological neurons.

How do neural networks work?

- Input Layer: This is where the data for processing enters. Each neuron in this layer stands for a feature of the input data.

- Hidden Layers: These layers handle the input data and pull out key features. They learn complex patterns and links within the data.

- Output Layer: This layer produces the final result or prediction based on the information processed by the hidden layers.
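A minimal sketch of a forward pass through these three layers, in plain Python. The layer sizes, the weights, and the choice of ReLU for the hidden layer and sigmoid for the output are hypothetical; in a real network the weights are learned during training rather than written by hand.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def relu(z):
    return max(0.0, z)

def dense(inputs, weights, biases, activation):
    """One fully connected layer: each neuron computes activation(w . x + b)."""
    return [
        activation(sum(w * x for w, x in zip(neuron_w, inputs)) + b)
        for neuron_w, b in zip(weights, biases)
    ]

# Hypothetical weights for a 2-input, 3-hidden-neuron, 1-output network.
hidden_w = [[0.5, -0.2], [0.3, 0.8], [-0.7, 0.1]]
hidden_b = [0.0, 0.1, 0.2]
output_w = [[1.0, -1.0, 0.5]]
output_b = [0.0]

x = [1.0, 2.0]                             # input layer: one value per feature
h = dense(x, hidden_w, hidden_b, relu)     # hidden layer
y = dense(h, output_w, output_b, sigmoid)  # output layer: a score between 0 and 1
print(h, y)
```

Each layer repeats the same operation (weighted sum, bias, activation) on the previous layer's outputs; training would adjust `hidden_w`, `hidden_b`, `output_w`, and `output_b` to reduce prediction error.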

What can neural networks do?

Neural networks have many uses such as:

- Image recognition: Spotting things, people, or places in pictures

- Natural language processing: Understanding and generating human language

- Speech recognition: Turning spoken words into written text

- Medical diagnosis: Looking at medical scans and info to spot illnesses

- Financial forecasting: Predicting stock prices or market trends

- Recommendation systems: Offering products or services based on what users like

Types of neural networks

Neural networks come in different forms, each with its own setup and skills:

- Feedforward neural networks: The simplest kind of neural network where data moves one way from the input layer to the output layer.

- Recurrent neural networks: These networks have feedback loops letting them handle data that comes in a sequence, like time series or natural language.

- Convolutional neural networks: Made to work with grid-like data such as images or audio, they excel at jobs like spotting objects and sorting images.

- Generative adversarial networks (GANs): These consist of two neural networks, a generator and a discriminator, trained against each other. The generator creates synthetic data and the discriminator tries to tell it apart from real data, which pushes the generator toward increasingly lifelike output.

Neural networks are a powerful tool for solving hard problems and are transforming many fields. As the technology advances, we can expect even more innovative applications of neural networks.

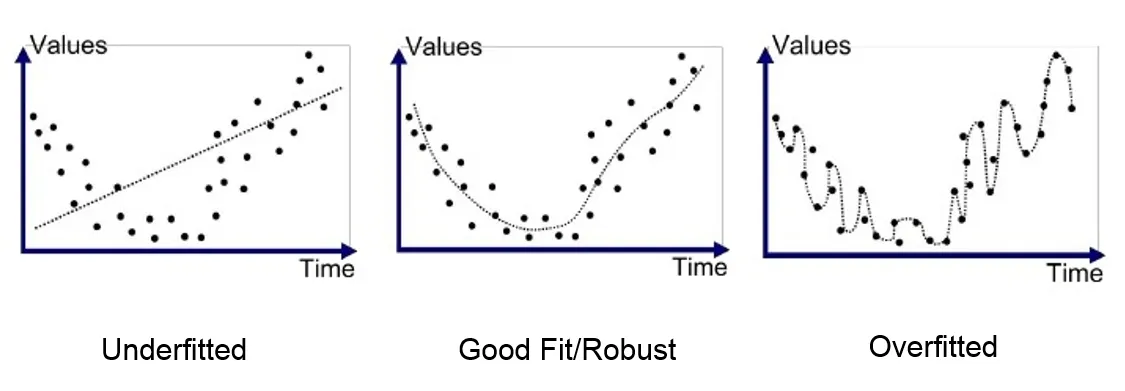

Define overfitting and how to prevent it.

Overfitting happens when a machine learning model becomes too complex and learns the training data too well, including its noise, so it performs poorly on new, unseen data. In practice, this shows up as high accuracy on the training set but poor performance in real-world use.

Reasons for Overfitting

- Complex model: A model with too many parameters or layers can memorize the training data instead of learning the underlying patterns.

- Not enough data: If the training dataset is too small, the model might overfit to the few examples it has.

- Noisy data: Outliers or noisy data can mislead the model and lead to overfitting.

Ways to Stop Overfitting

1. Regularization:

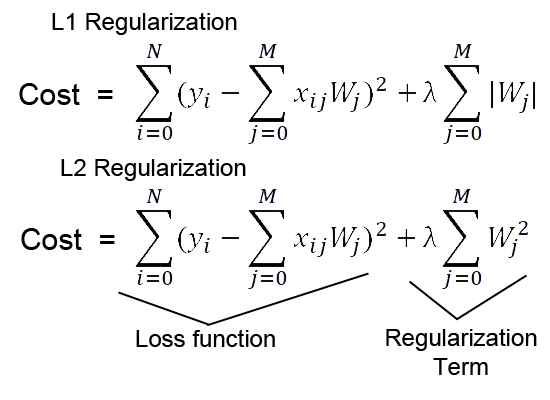

- L1 regularization: Has an impact on the loss function by adding a penalty term. This leads to sparsity, which means a lot of model parameters end up as zero.

- L2 regularization: Adds a penalty term to make parameter values smaller. This helps to reduce how complex the model is.

2. L1 and L2 regularization

3. Early stopping:

- Keep an eye on how well the model performs with a validation set as it trains.

- End training when errors in validation start to climb, which stops the model from fitting too to the training data.

4. Data augmentation:

- Make more training data by changing existing data. You might rotate, flip, or resize images.

- This helps the model learn better by showing it more diverse examples.

5. Cross-validation:

- Split the data into several parts and train the model on different subsets. Then, test its performance on the part you left out.

- This lets you check how well the model can apply what it learned to new data and spot if it’s overfitting.

6. Feature engineering:

- Pick and craft key features to steer clear of adding extra or messy data that might cause overfitting.

7. Ensemble methods:

- Mix several models to lower the chance of overfitting. Approaches such as bagging and boosting can boost how well the model performs overall.
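A small sketch of how the two regularization penalties enter the loss and how they affect weights differently. The L1 update uses soft-thresholding (the proximal step commonly paired with an L1 penalty), and the L2 update is a plain gradient step on the penalty alone. The weights, `lam` (penalty strength), and `lr` (learning rate) are made-up values for illustration.

```python
def l1_penalty(weights, lam):
    """lam * sum(|w|): the L1 (lasso) penalty."""
    return lam * sum(abs(w) for w in weights)

def l2_penalty(weights, lam):
    """lam * sum(w^2): the L2 (ridge / weight-decay) penalty."""
    return lam * sum(w * w for w in weights)

def regularized_loss(data_loss, weights, lam, kind="l2"):
    """Total loss = data loss + penalty; a larger lam pushes toward simpler models."""
    penalty = l1_penalty(weights, lam) if kind == "l1" else l2_penalty(weights, lam)
    return data_loss + penalty

def l1_step(weights, lam, lr):
    """Soft-thresholding: shrink each weight toward 0 by lr*lam, clipping at exactly 0."""
    t = lr * lam
    return [0.0 if abs(w) <= t else (w - t if w > 0 else w + t) for w in weights]

def l2_step(weights, lam, lr):
    """Gradient step on lam*sum(w^2) alone: every weight is scaled by (1 - 2*lr*lam)."""
    return [w * (1 - 2 * lr * lam) for w in weights]

w = [0.05, -0.03, 1.5, -2.0]
print(l1_step(w, lam=0.1, lr=1.0))  # small weights become exactly 0.0
print(l2_step(w, lam=0.1, lr=1.0))  # every weight shrinks by 20%, none reach 0
```

This is why L1 tends to produce sparse models (small weights are zeroed out), while L2 shrinks all weights proportionally without eliminating any of them.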

When you grasp what leads to overfitting and put these prevention methods into action, you can create machine learning models that are more reliable and do well with data they haven’t seen before.
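Two of the prevention techniques, early stopping and cross-validation, can be sketched in a few lines of Python. The validation losses are invented numbers, `patience` is an assumed hyperparameter (how many non-improving epochs to tolerate), and the folds are contiguous and unshuffled for readability; in practice you would usually shuffle the data first or use a library implementation.

```python
def early_stop_epoch(val_losses, patience=2):
    """Return the epoch whose weights we would keep: the best validation loss
    seen before `patience` consecutive epochs fail to improve on it."""
    best_epoch, best_loss, bad_epochs = 0, float("inf"), 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_epoch, best_loss, bad_epochs = epoch, loss, 0
        else:
            bad_epochs += 1
            if bad_epochs >= patience:
                break  # validation error keeps climbing: stop training
    return best_epoch

def k_fold_splits(n_samples, k):
    """Yield (train_indices, val_indices) for k roughly equal, contiguous folds."""
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        val = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n_samples))
        yield train, val
        start += size

# Validation loss falls, then rises as the model starts to overfit (made-up numbers).
print(early_stop_epoch([0.9, 0.6, 0.45, 0.47, 0.52, 0.6]))  # 2
for train, val in k_fold_splits(6, 3):
    print(train, val)
```

Each sample appears in exactly one validation fold, so averaging the validation scores across folds gives a less noisy estimate of generalization than a single train/validation split.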

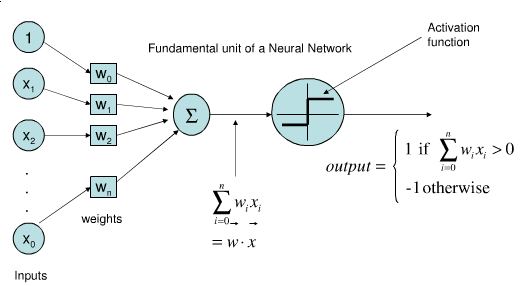

What is a perceptron?

A perceptron is the most basic computational unit of artificial neural networks. Loosely modeled on the biological neuron, it performs binary classification, assigning each input to one of two classes.

How does a perceptron work?

- Inputs: A perceptron receives several inputs, and each of them represents one feature from the data.

- Weights: Each of the inputs is multiplied by a specific weight, representing how important this input is in making the decision.

- Summation: The weighted inputs are summed, usually together with a bias term.

- Activation function: The sum is passed through an activation function, which introduces non-linearity and determines the output of the perceptron.
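A minimal perceptron, assuming a step activation and the classic perceptron learning rule (add `lr * error * input` to each weight after every example). The class and parameter names are hypothetical. The toy task is the logical AND function, which is linearly separable, so training is guaranteed to converge.

```python
def step(z, threshold=0.0):
    """Step activation: 1 if z >= threshold, else 0."""
    return 1 if z >= threshold else 0

class Perceptron:
    def __init__(self, n_inputs, lr=1.0):
        self.weights = [0.0] * n_inputs
        self.bias = 0.0  # acts as a learnable (negative) threshold
        self.lr = lr

    def predict(self, x):
        # Weighted sum of the inputs plus bias, passed through the step activation.
        z = sum(w * xi for w, xi in zip(self.weights, x)) + self.bias
        return step(z)

    def fit(self, X, y, epochs=20):
        # Perceptron learning rule: nudge weights only when a prediction is wrong.
        for _ in range(epochs):
            for x, target in zip(X, y):
                error = target - self.predict(x)  # -1, 0 or +1
                self.weights = [w + self.lr * error * xi for w, xi in zip(self.weights, x)]
                self.bias += self.lr * error
        return self

X = [[0, 0], [0, 1], [1, 0], [1, 1]]
p = Perceptron(2).fit(X, [0, 0, 0, 1])  # logical AND
print([p.predict(x) for x in X])        # [0, 0, 0, 1]
```

Training the same class on XOR labels (`[0, 1, 1, 0]`) never gets all four cases right, which illustrates the linear-separability limitation discussed below.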

Types of Activation Functions

- Step function: Outputs 1 if the sum is greater than or equal to a threshold, and outputs 0 otherwise.

- Sigmoid function: Outputs a value between 0 and 1 along a smooth, S-shaped curve.

- ReLU (Rectified Linear Unit): Outputs the maximum of 0 and the input, which also introduces non-linearity but does not saturate for positive inputs.
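The three activation functions can be written directly in Python. The gradient helpers are an addition for illustration: they show what "saturating" means, since the sigmoid's slope approaches zero for large inputs while ReLU's slope stays at 1 for any positive input.

```python
import math

def step(z, threshold=0.0):
    return 1.0 if z >= threshold else 0.0

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def relu(z):
    return max(0.0, z)

def sigmoid_grad(z):
    s = sigmoid(z)
    return s * (1.0 - s)  # tends to 0 for large |z| (saturation)

def relu_grad(z):
    return 1.0 if z > 0 else 0.0  # stays 1 for any positive input

for z in (-2.0, 0.0, 2.0, 10.0):
    print(z, step(z), round(sigmoid(z), 3), relu(z), round(sigmoid_grad(z), 5), relu_grad(z))
```

A near-zero gradient means almost no learning signal flows back through that neuron, which is one reason ReLU became the default choice for hidden layers in deep networks.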

Limitations of perceptrons

A single perceptron can only solve linearly separable problems, that is, problems where a straight line (or, in higher dimensions, a hyperplane) separates the classes. The classic counterexample is XOR, which no single perceptron can learn.

Limited capacity: Because a perceptron is so simple, it cannot learn complex, non-linear patterns on its own.

Perceptrons as building blocks

A single perceptron is limited, but it provides the foundation for more complex neural networks. When many perceptrons are combined in layers (a multilayer perceptron), the result is a far more powerful model that can learn very complex patterns and solve many different kinds of problems.

Moderate AI Interview Questions

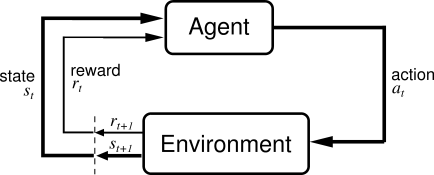

What is reinforcement learning?

Reinforcement learning is a type of machine learning in which an agent learns by interacting with an environment through trial and error, aiming to maximize its cumulative reward. It's like teaching a dog new tricks using treats and punishments.

Key Components: Agent, Environment, State, Action, Reward
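As a minimal sketch of these components, here is tabular Q-learning (one of many RL algorithms) on a hypothetical five-cell corridor: the agent starts in cell 0, can move left or right, and receives a reward of 1 only when it reaches cell 4. The environment, the hyperparameters (`alpha` learning rate, `gamma` discount, `epsilon` exploration rate), and all names are assumptions chosen to keep the example small.

```python
import random

# Environment: cells 0..4, start at 0, reward +1 for reaching cell 4 (the goal).
N_STATES, GOAL = 5, 4
ACTIONS = (-1, 1)  # move left or move right

def env_step(state, action):
    """Return (next_state, reward, done) for one action."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    reward = 1.0 if next_state == GOAL else 0.0
    return next_state, reward, next_state == GOAL

def q_learning(episodes=200, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Epsilon-greedy: mostly exploit the best-known action, sometimes explore.
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)
            else:
                best = max(q[(state, a)] for a in ACTIONS)
                action = rng.choice([a for a in ACTIONS if q[(state, a)] == best])
            next_state, reward, done = env_step(state, action)
            # Move the estimate toward reward + discounted value of the best next action.
            best_next = 0.0 if done else max(q[(next_state, a)] for a in ACTIONS)
            q[(state, action)] += alpha * (reward + gamma * best_next - q[(state, action)])
            state = next_state
    return q

q = q_learning()
policy = [max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(GOAL)]
print(policy)  # learned action for each non-goal cell
```

After training, the greedy policy moves right in every cell: the discount `gamma` makes rewards received sooner worth more, so heading straight for the goal scores highest.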

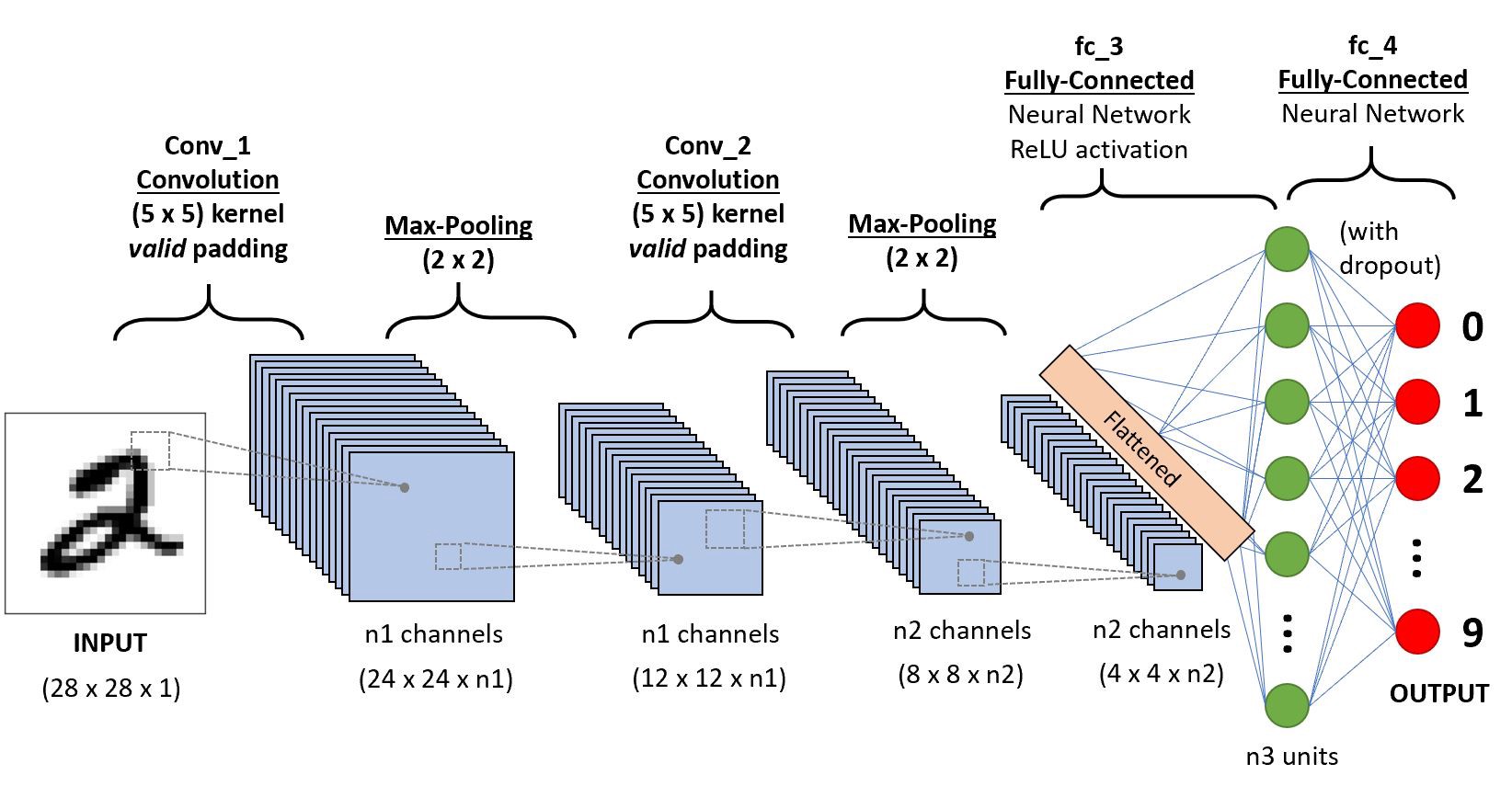

Explain the working of a convolutional neural network (CNN).

Convolutional Neural Networks (CNNs) are neural networks designed to operate on grid-structured data, such as images (two-dimensional grids of pixels). CNNs are widely used for image recognition and object detection, and have also been applied to natural language processing tasks.

Key Components:

- Convolutional Layers: These layers apply filters over the input data to extract local features, such as edges and textures.

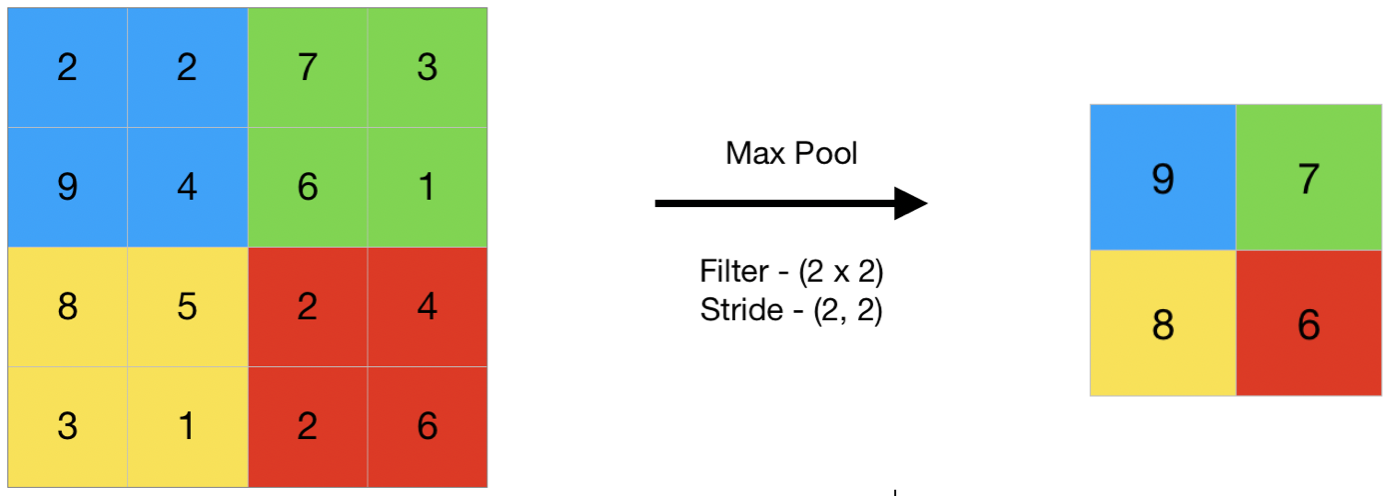

- Pooling Layers: These layers downsample the feature maps, reducing computational cost while keeping the most important information.

- Fully Connected Layers: These resemble the layers of a traditional neural network and combine the extracted features to generate the final output.

How CNNs work:

- Input: The CNN accepts an input image, typically represented as a 3D tensor (height, width, channels).

- Convolution: Each convolutional layer slides its filters over the input image and computes a dot product at every position. Each filter produces a feature map.

- Pooling: Pooling downsamples the feature maps, reducing their dimensionality while retaining the most relevant information.

- Fully Connected Layers: The feature maps are flattened and passed through one or more fully connected layers, which combine the extracted features to produce the final output, such as a classification or regression result.
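The convolution and pooling steps can be sketched in plain Python on a tiny grayscale image. This assumes a single channel, a stride of 1, no padding, and 2x2 max pooling; the 2x2 filter is a hand-picked vertical-edge detector, whereas a real CNN learns its filter values during training. Like most deep-learning libraries, the code computes cross-correlation (the kernel is not flipped), which is what CNN layers call convolution.

```python
def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` (stride 1, no padding) and take a dot product at each position."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj] for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]

def max_pool2d(fmap, size=2):
    """Non-overlapping max pooling: keep the largest value in each size x size window."""
    return [
        [
            max(fmap[i + di][j + dj] for di in range(size) for dj in range(size))
            for j in range(0, len(fmap[0]) - size + 1, size)
        ]
        for i in range(0, len(fmap) - size + 1, size)
    ]

# 5x5 grayscale "image": dark columns (0) on the left, bright columns (9) on the right.
image = [[0, 0, 9, 9, 9] for _ in range(5)]
vertical_edge = [[-1, 1], [-1, 1]]  # hypothetical hand-picked edge filter

fmap = conv2d_valid(image, vertical_edge)  # 4x4 feature map
pooled = max_pool2d(fmap)                  # 2x2 after pooling
print(fmap)
print(pooled)
```

The feature map responds (value 18) only in the column where the image changes from dark to bright, and pooling keeps that strong response while halving each spatial dimension.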

Applications:

- Image recognition: Recognize objects, people, and scenes in images.

- Object detection: Locate and classify objects within an image.

- Image segmentation: Partition images into different regions based on content.

- Natural language processing: Process text data for tasks such as sentiment analysis or machine translation.

CNNs have revolutionized various fields due to their ability to automatically learn and extract meaningful features from complex data.


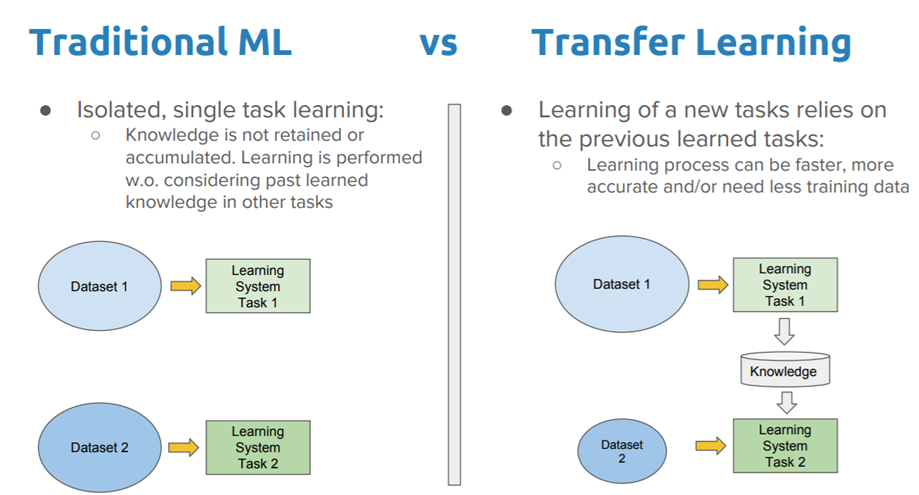

Differentiate between Traditional ML and Transfer Learning.

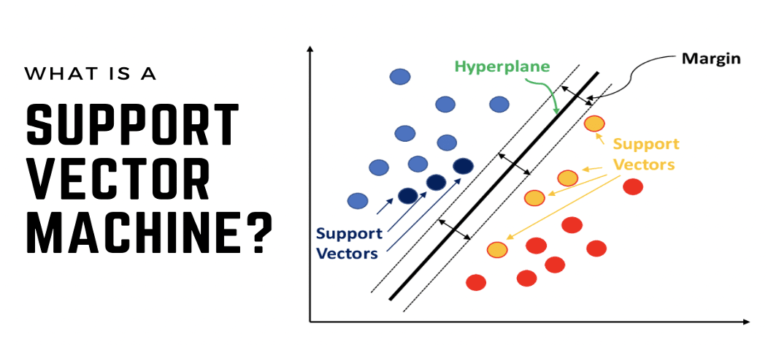

What is a Support Vector Machine (SVM)?

A supervised machine learning algorithm that finds the optimal hyperplane to separate data points into two classes.

Process:

- Find the hyperplane that maximizes the margin between the two classes.

- Support vectors are the data points closest to the hyperplane.
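A minimal sketch of a soft-margin linear SVM trained by subgradient descent on the regularized hinge loss, using made-up 2-D data. This is one simple training method chosen for illustration; libraries typically use dedicated solvers. The names, `lam` (regularization strength, which plays the role of 1/C up to scaling), the learning rate, and the epoch count are assumptions.

```python
def decision(w, b, x):
    """Signed score w . x + b; its sign is the predicted class."""
    return sum(wj * xj for wj, xj in zip(w, x)) + b

def train_linear_svm(X, y, lam=0.01, lr=0.1, epochs=200):
    """Minimize lam/2 * ||w||^2 + mean(max(0, 1 - y * (w . x + b))). Labels must be -1 or +1."""
    n = len(X)
    w, b = [0.0] * len(X[0]), 0.0
    for _ in range(epochs):
        grad_w = [lam * wj for wj in w]  # gradient of the lam/2 * ||w||^2 term
        grad_b = 0.0
        for xi, yi in zip(X, y):
            if yi * decision(w, b, xi) < 1:  # hinge active: inside the margin or misclassified
                grad_w = [g - yi * xj / n for g, xj in zip(grad_w, xi)]
                grad_b -= yi / n
        w = [wj - lr * g for wj, g in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b

X = [[-2.0, -1.0], [-1.5, -2.5], [-3.0, -2.0], [2.0, 1.5], [1.5, 3.0], [3.0, 2.0]]
y = [-1, -1, -1, 1, 1, 1]
w, b = train_linear_svm(X, y)
print([1 if decision(w, b, x) >= 0 else -1 for x in X])            # should match y
print([round(yi * decision(w, b, xi), 2) for xi, yi in zip(X, y)])  # functional margins
```

Points whose margin `y * (w . x + b)` ends up close to 1 behave as support vectors; points far from the boundary stop contributing to the gradient once their margin exceeds 1, so they do not move the hyperplane.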

Advantages:

- Effective for high-dimensional data.

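- Fairly robust to outliers when a soft margin is used, since points far from the boundary do not affect it.

- Can handle both linear and nonlinear separation (the latter via kernel functions).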

Disadvantages:

- Can be computationally expensive for large datasets.

- Sensitive to feature scaling.

Advanced AI Interview Questions

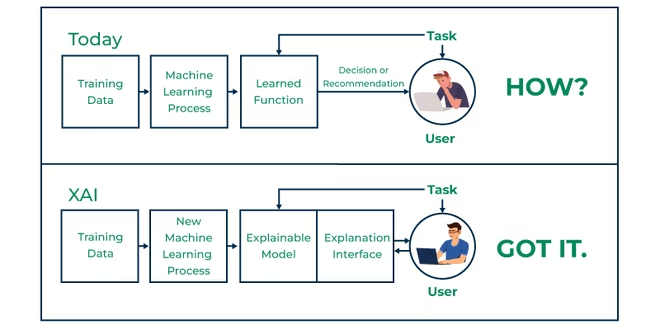

What is Explainable AI (XAI)?

A field of AI that focuses on making machine learning models more understandable and interpretable to humans. The goal of XAI is to improve transparency, trust, and accountability in AI systems.

Key Techniques:

- Feature importance: Identifying the most influential features in a model’s decision-making.

- LIME (Local Interpretable Model-Agnostic Explanations): Creating simple, local explanations for complex models.

- SHAP (Shapley Additive Explanations): Attributing the contribution of each feature to the model’s prediction.

- Visualization: Creating visualizations to help humans understand model behavior.
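Feature importance can be computed in several ways. The sketch below uses permutation importance, a simple model-agnostic method (not LIME or SHAP): shuffle one feature at a time and measure how much the model's error grows. The model, data, and function names are hypothetical, and the targets are generated by the model itself so the baseline error is zero.

```python
import random

def mse(model, X, y):
    """Mean squared error of `model` on (X, y)."""
    return sum((model(x) - t) ** 2 for x, t in zip(X, y)) / len(y)

def permutation_importance(model, X, y, n_repeats=10, seed=0):
    """Importance of feature j = average increase in error when column j is shuffled."""
    rng = random.Random(seed)
    baseline = mse(model, X, y)
    importances = []
    for j in range(len(X[0])):
        total_increase = 0.0
        for _ in range(n_repeats):
            column = [x[j] for x in X]
            rng.shuffle(column)  # break the link between feature j and the target
            X_perm = [x[:j] + [v] + x[j + 1:] for x, v in zip(X, column)]
            total_increase += mse(model, X_perm, y) - baseline
        importances.append(total_increase / n_repeats)
    return importances

def model(x):
    """Hypothetical black-box model: depends strongly on feature 0, ignores feature 1."""
    return 3.0 * x[0] + 0.0 * x[1]

data_rng = random.Random(1)
X = [[data_rng.uniform(-1, 1), data_rng.uniform(-1, 1)] for _ in range(100)]
y = [model(x) for x in X]
print(permutation_importance(model, X, y))  # large value for feature 0, 0.0 for feature 1
```

Because the method only calls `model(x)`, it works the same way for any black-box model, which is what makes it useful for explainability.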

Benefits:

- Improved trust: Users can better understand and trust AI systems.

- Enhanced decision-making: Explainable AI can help humans make informed decisions.

- Regulatory compliance: Helps meet transparency and accountability requirements under regulations such as GDPR and HIPAA.

Challenges:

- Complexity of models: Some models are inherently difficult to explain.

- The trade-off between accuracy and explainability: Increasing explainability may sometimes compromise accuracy.

- Lack of standardization: There is no universally accepted standard for explainability.

XAI is a rapidly evolving field with the potential to revolutionize the way we interact with and rely on AI systems.

What is the curse of dimensionality in AI?

The Curse of Dimensionality is a phenomenon encountered in machine learning when dealing with high-dimensional data. As the number of features or dimensions in a dataset increases, the data points become increasingly sparse, making it difficult for algorithms to find meaningful patterns or relationships.

Challenges:

- Data Sparsity: Data points become widely spread out, making it harder to identify clusters or boundaries.

- Computational Complexity: Algorithms become computationally expensive as the dimensionality increases.

- Overfitting: Models may become too complex and overfit the training data, leading to poor generalization.

- Distortion of Distance Metrics: Distances concentrate, meaning the nearest and farthest neighbors of a point end up at almost the same distance, so distance-based methods such as k-nearest neighbors become less reliable.
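The distance-concentration effect is easy to observe numerically. This sketch, with arbitrary choices of point count and random seed, measures the relative contrast between the farthest and nearest of 200 random points from a random query point in the unit hypercube. As the dimension grows, the value shrinks toward zero, meaning all points look almost equally far away. It requires Python 3.8+ for `math.dist`.

```python
import math
import random

def relative_contrast(n_points, dims, seed=0):
    """(farthest - nearest) / nearest distance from a random query to n random points."""
    rng = random.Random(seed)
    query = [rng.random() for _ in range(dims)]
    dists = [math.dist(query, [rng.random() for _ in range(dims)]) for _ in range(n_points)]
    nearest, farthest = min(dists), max(dists)
    return (farthest - nearest) / nearest

for d in (2, 10, 100, 1000):
    print(d, round(relative_contrast(200, d), 3))
```

In two dimensions the farthest point is many times farther than the nearest one; in a thousand dimensions the gap shrinks to a small fraction of the distance, which is why "nearest neighbor" loses much of its meaning.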

Mitigating the Curse of Dimensionality:

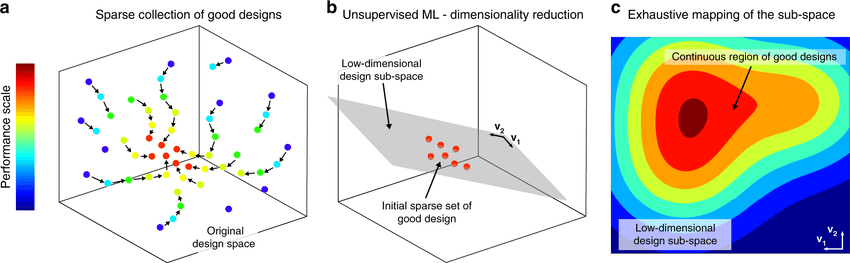

- Dimensionality Reduction: Principal Component Analysis (PCA) reduces the number of dimensions while preserving as much of the data's variance as possible; t-SNE is mainly used to project high-dimensional data into two or three dimensions for visualization.

- Feature Selection: Identifying and selecting the most relevant features can help mitigate the curse of dimensionality.

- Ensemble Methods: Combining multiple models can improve generalization and reduce overfitting.

- Careful Model Selection: Choosing appropriate algorithms and techniques that are suitable for high-dimensional data is crucial.
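A minimal sketch of PCA for the first principal component, using power iteration on the covariance matrix in plain Python. The data points are made up, and in practice you would use a linear-algebra library (for example an SVD routine) rather than this loop; the point is only to show that PCA finds the direction of greatest variance and projects the data onto it.

```python
import math

def pca_first_component(X, iters=100):
    """Return (means, first principal component) via power iteration on the covariance matrix."""
    n, d = len(X), len(X[0])
    means = [sum(x[j] for x in X) / n for j in range(d)]
    centered = [[x[j] - means[j] for j in range(d)] for x in X]
    cov = [[sum(r[i] * r[j] for r in centered) / (n - 1) for j in range(d)] for i in range(d)]
    v = [1.0] * d  # starting vector, assumed not orthogonal to the top eigenvector
    for _ in range(iters):
        w = [sum(cov[i][j] * v[j] for j in range(d)) for i in range(d)]
        norm = math.sqrt(sum(c * c for c in w))
        v = [c / norm for c in w]
    return means, v

def project(X, means, component):
    """Reduce each point to a single coordinate along the component."""
    return [sum((x[j] - means[j]) * component[j] for j in range(len(x))) for x in X]

# 2-D points lying close to the line y = x (made-up values).
X = [[1.0, 1.1], [2.0, 1.9], [3.0, 3.2], [4.0, 3.9], [5.0, 5.1]]
means, pc1 = pca_first_component(X)
print(pc1)                     # roughly [0.707, 0.707]
print(project(X, means, pc1))  # 1-D representation of the 2-D data
```

Here two features collapse into one coordinate with almost no loss, because nearly all of the variance lies along the diagonal direction.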

By understanding the curse of dimensionality and employing effective strategies to address it, machine learning practitioners can improve the performance and reliability of their models, especially when working with large and complex datasets.

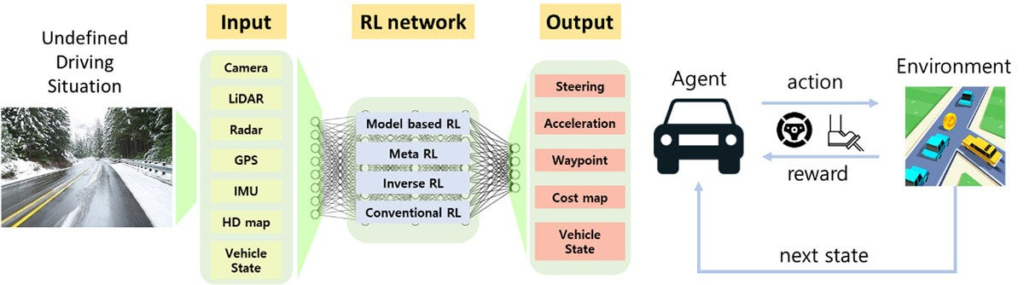

How does reinforcement learning apply to self-driving cars?

Reinforcement learning (RL) is one of the most promising machine learning techniques for developing self-driving cars. Because an RL agent learns by trial and error, interacting with a complex environment and receiving rewards or penalties for its actions, it is well suited to the sequential decisions a car must make in complex, dynamic traffic.

Key applications of RL in self-driving cars:

- Path planning: Learning optimal routes across different roads, road conditions, and traffic situations.

- Decision-making: Deciding, for instance, whether to accelerate, brake, or change lanes.

- Traffic light control: Optimizing signal timing to keep traffic flowing and reduce congestion.

- Autonomous parking: RL can teach cars to park themselves in different kinds of spaces, for instance parallel and perpendicular parking.

How RL works in self-driving cars:

- Environment: The world in which the autonomous car operates, including the road, other cars, pedestrians, and traffic signs.

- State: The car's current situation, such as its position, its speed, and what surrounds it.

- Action: The possible actions include accelerating, braking, turning, and changing lanes.

- Reward: The car receives rewards for safe, efficient driving and penalties for accidents or traffic-rule violations.

- Learning: The RL algorithm in the car learns a policy that maps states to appropriate actions, maximizing the cumulative reward over time.
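The reward is where designers encode what "good driving" means. The sketch below is a hypothetical hand-written reward function; the `DrivingOutcome` fields, penalty sizes, and weights are illustrative assumptions, not values from any real self-driving system. An RL algorithm would then learn a policy that maximizes the sum of these rewards over time.

```python
from dataclasses import dataclass

@dataclass
class DrivingOutcome:
    """What happened during one time step (all fields are hypothetical)."""
    progress_m: float            # metres travelled along the planned route
    collided: bool
    ran_red_light: bool
    speed_over_limit_kmh: float
    hard_brake: bool

def reward(o: DrivingOutcome) -> float:
    """Hypothetical reward: encourage progress, penalize unsafe or illegal driving.
    The weights are illustrative assumptions, not tuned values."""
    r = 0.1 * o.progress_m                        # efficient driving
    if o.collided:
        r -= 100.0                                # accidents outweigh everything else
    if o.ran_red_light:
        r -= 20.0                                 # traffic-rule violation
    r -= 0.5 * max(0.0, o.speed_over_limit_kmh)   # speeding penalty
    if o.hard_brake:
        r -= 1.0                                  # comfort: discourage jerky control
    return r

safe = DrivingOutcome(progress_m=15.0, collided=False, ran_red_light=False,
                      speed_over_limit_kmh=0.0, hard_brake=False)
crash = DrivingOutcome(progress_m=20.0, collided=True, ran_red_light=False,
                       speed_over_limit_kmh=10.0, hard_brake=True)
print(reward(safe), reward(crash))
```

Making the collision penalty much larger than any achievable progress bonus is a deliberate design choice: the agent should never find it worthwhile to trade safety for speed.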

Challenges and future directions:

- Safety: Safety is the main challenge for self-driving cars. RL algorithms must be carefully designed and tested to minimize the risk of accidents.

- Scalability: Training RL models for self-driving cars can be computationally expensive. Efficient and scalable RL algorithms are still an active research topic.

- Generalization: What a self-driving car learns must generalize to environments it has never seen, which requires robust RL algorithms that can handle rare and extreme situations.

As RL continues to advance, we can expect to see even more sophisticated and capable self-driving cars in the future.

Bonus AI Interview Questions

Behavioral and Practical AI Questions:

How do you approach data cleaning in AI projects?

Explain a real-world AI project you’ve worked on.

How do you handle bias in AI models?

What are the ethical considerations in AI development?

How do you ensure model interpretability in production AI systems?

AI interviews in 2024 require a sound understanding of both fundamental and advanced topics. With the material covered in this guide, you will be ready for the technical questions and well equipped to present yourself with confidence. Whether you are preparing for your first AI role or fine-tuning your hiring process, these questions will help you stay ahead in the fast-changing AI landscape. Practice daily, keep up with trends, and you will be well positioned to succeed.